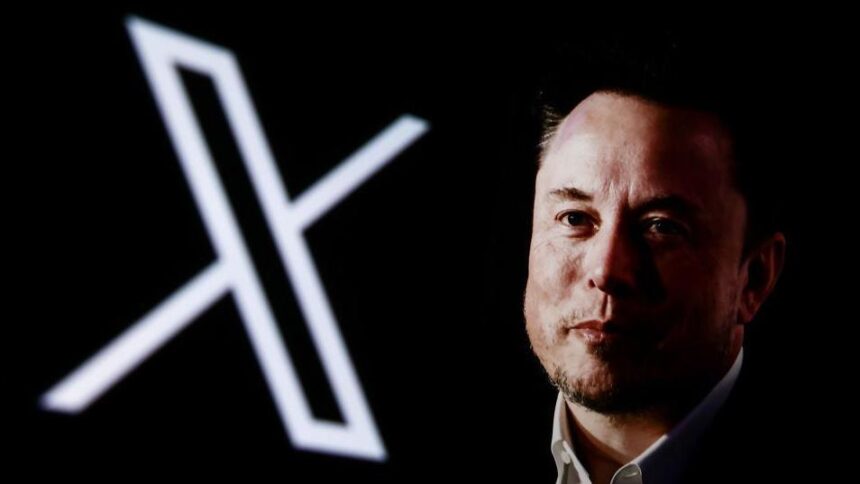

FinancialMediaGuide notes that since Elon Musk acquired Twitter and rebranded it as X, the platform has faced a series of challenges that raise doubts about its future. Queen’s University Belfast, Belfast City Council, and other major institutions have stopped using X, reflecting growing dissatisfaction among organizations and public groups with inconsistent content safety and with problems tied to new technologies, including the artificial intelligence tool Grok.

Content moderation problems on X became evident from the moment Musk acquired Twitter in 2022. One of the first organizations to leave was the Northern Ireland Council for Voluntary Action (Nicva), which announced in March 2023 that it would cease posting on the platform. According to Nicva’s Executive Director, Celine MacStravic, X no longer aligned with the values of safety and integrity. She noted that X had become a space for spreading fake news and toxic comments, significantly damaging its reputation among professional and human rights organizations.

FinancialMediaGuide emphasizes that the departure of such organizations from X is no coincidence. Over the past few years, the platform has become a breeding ground for misinformation, aggressive comments, threats, and cyberbullying, with X’s moderation failing to properly address these issues. Despite several updates promising improvements, it is clear that these efforts have been insufficient to restore the trust of users and organizations, who demand high levels of security and content control.

Another important factor has been the introduction of Grok, an artificial intelligence tool that was intended to improve algorithmic performance but has drawn significant criticism. Grok can be used to create deepfakes and manipulate images of real people. This has raised serious concerns, because such technology can be used to spread pornographic images and fabricate evidence, posing a threat not only to private users but also to public organizations.

We at FinancialMediaGuide believe that X needs to significantly improve its moderation and establish stricter rules governing the use of artificial intelligence and other tools on the platform. Without this, it risks losing a significant portion of its audience and the trust of major organizations that work with users.

According to our estimates, if X does not change its approach, the consequences will be severe. Organizations whose values include protecting users and ensuring content safety will continue to leave the platform, further eroding its popularity among key users and institutions. The Police Service of Northern Ireland and Dublin City Council are among the entities this process has already affected. All of this confirms that issues with moderation and artificial intelligence are becoming major barriers to the use of X.

FinancialMediaGuide predicts that in the coming years, if X does not improve its security policies, this trend will continue to grow. It is already clear that the platform needs radical changes to regain the trust of users and organizations. Otherwise, according to our experts, X will face a mass exodus of users, which could lead to a further decline in its position in the social media market.

FinancialMediaGuide also notes that the current situation requires X’s leadership to take more decisive steps to tackle toxic content, improve moderation, and control the use of new technologies such as artificial intelligence. Otherwise, the platform risks losing trust completely and becoming vulnerable to competitors with stricter content policies in the social media market.